Imagine you’re about to pick a movie to watch at a sleepover with your friends. Or maybe you’re deciding which local spot would be the best for a group hangout. Now, imagine if you had to make that choice not just for you and your friends, but for your entire school or even your town. The stakes suddenly rise, don’t they?

Now, let’s add another layer. Imagine you have some insider info—data that tells you which movie most teens love, or which hangout spot is trending among high schoolers. It seems a bit easier to decide, right? But here’s the catch: what if that data is biased? What if it’s influenced by unfair factors or doesn’t consider everyone’s preferences?

Welcome to the world of data interpretation, where these tricky questions need to be answered before any decision is made 🧐. You’re not just interpreting data; you’re also its steward. Your decisions, influenced by this data, could shape the experiences of countless others 🙌.

Why Do We Need Data Ethics?

Let’s imagine this: you’re on the school volleyball team, and your coach decides on the team lineup based on players’ speed, height, and strength statistics. But what if the equipment used to measure these statistics was faulty? It might lead to a star player being benched and a critical game being lost.

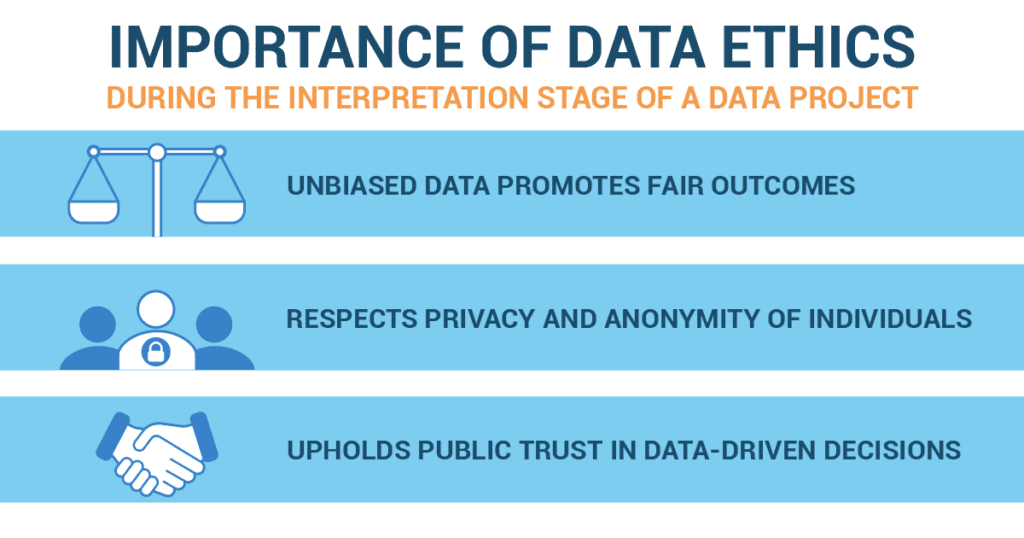

There are a number of things to keep in mind when considering data ethics:

- The conclusions drawn from a dataset directly influence decisions that can have significant societal impacts. If data is misinterpreted or biases are not accounted for, these decisions can lead to unfair or harmful outcomes.

- Interpreting data, especially in sensitive areas, requires respect for privacy. The data should be interpreted in a way that does not compromise the privacy and anonymity of individuals.

- Responsible data interpretation encourages transparency and accountability, maintaining public trust in data-driven decisions.

How to Apply Data Ethics

Applying data ethics when interpreting data is a bit like wearing a helmet while cycling. It protects us and those around us. Here’s how you do it:

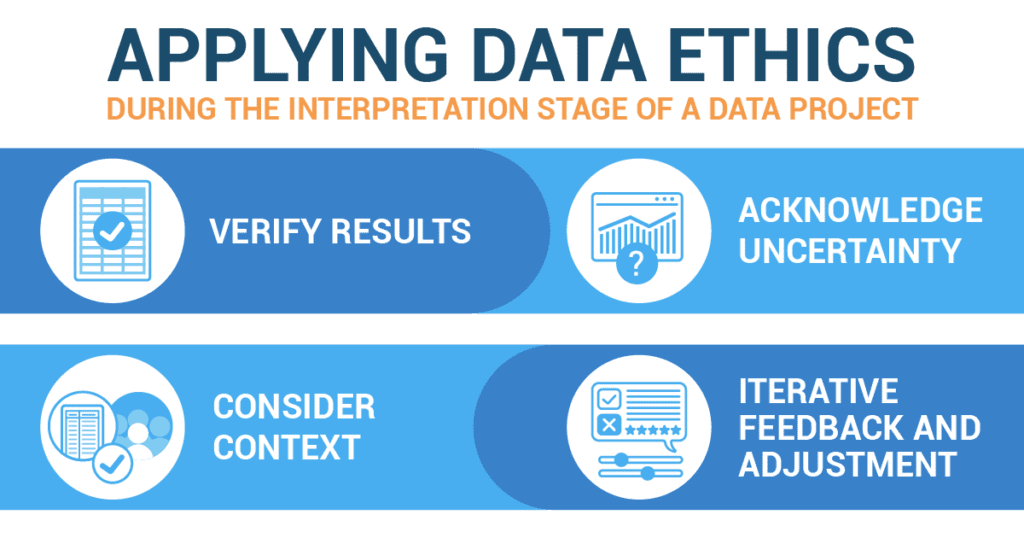

- First, verify the results. It’s like a ‘double-check’ system. When your friends tell you about a new, awesome burger joint, you’d probably check a few more reviews before trying it out, right? Similarly, always cross-check your data with different techniques or datasets to make sure it’s reliable.

- Next, always acknowledge uncertainty. Think of it like the weather forecast. The news tells us there’s a 70% chance of rain. They’re acknowledging that their prediction might not be perfect. In the same way, be transparent about where your data might have limitations or potential errors.

- Don’t forget to consider the context. Suppose you’re deciding on the best time to hold a school event. You wouldn’t just look at your own schedule, right? You’d look at everyone else’s schedules, too. The “Fairness, Accountability, and Transparency in Machine Learning” (FATML) framework advises us to consider the context in which a model will operate and its possible disparate impacts on different demographic groups. So, think about who your data affects and how it affects them.

- Lastly, make room for iterative feedback and adjustment. This means monitoring the effects of your model, listening to others’ feedback about your interpretation of the data, and being willing to adjust it. Data scientists should not just ‘fire and forget’ their models but should be responsible for their ongoing impact. Like in a video game, you learn from your mistakes, level up, and keep going!
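To make the “acknowledge uncertainty” step concrete, here is a minimal Python sketch that reports a survey result together with a margin of error. The function name `proportion_with_margin` and the survey numbers are made up for illustration, and the formula is the common normal approximation for a proportion, which is only a rough guide for small samples.

```python
import math


def proportion_with_margin(yes_count, sample_size, z=1.96):
    """Return (share, margin_of_error) for a yes/no survey question.

    Uses the normal approximation for a proportion. z=1.96 corresponds
    to roughly 95% confidence. This is a rough guide only: it is less
    reliable for tiny samples or shares very close to 0% or 100%.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= yes_count <= sample_size:
        raise ValueError("yes_count must be between 0 and sample_size")
    share = yes_count / sample_size
    margin = z * math.sqrt(share * (1 - share) / sample_size)
    return share, margin


# Hypothetical example: 70 of 100 surveyed students picked the same movie.
share, margin = proportion_with_margin(70, 100)
print(f"{share:.0%} of surveyed students, give or take {margin:.0%}")
```

Reporting “about 70%, give or take 9%” instead of a bare “70%” is the survey version of the forecaster’s “70% chance of rain”: it tells your audience how much to trust the number.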

Common Mistakes in Data Ethics

Beware of some common mistakes!

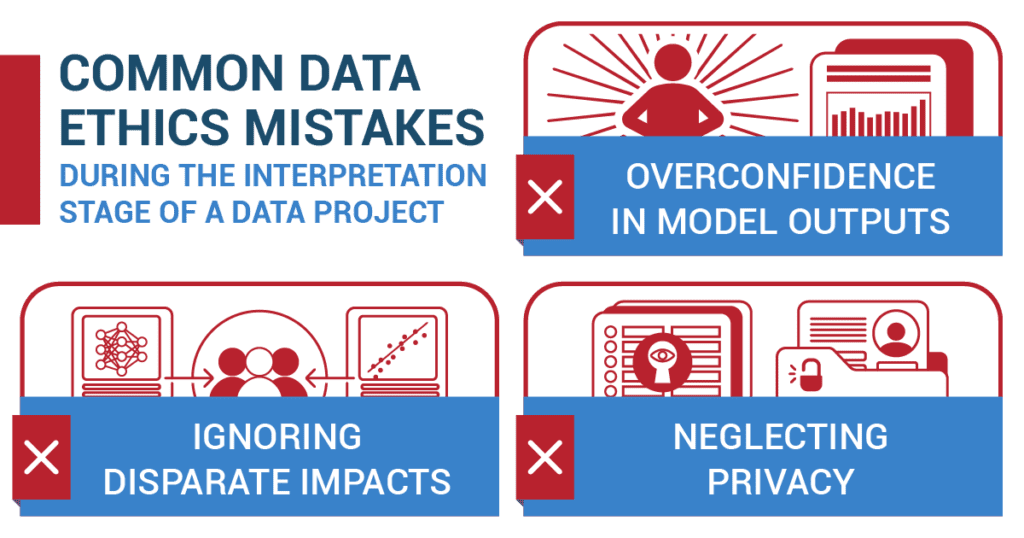

- Being overconfident in your model outputs

  - While it is important to ground decision-making in analysis, it is also important not to become over-reliant on model outputs.

  - Countermeasure: Always consider model outputs as probabilistic and uncertain, disclosing and considering potential errors and limitations. Remember, data is like the weather forecast; it’s not always 100% accurate.

- Ignoring disparate impacts

  - Unfair outcomes and discrimination can occur if you ignore the impact on different groups.

  - Countermeasure: Use techniques such as disparate impact analysis or fairness metrics to identify and mitigate potential biases in model outputs. It’s like organizing a party: you need to make sure there’s food that everyone can eat. Some guests might be vegetarians; some might be allergic to peanuts. Similarly, make sure your data interpretation is fair to everyone.

- Neglecting privacy

  - Unintentional disclosure of sensitive information during the interpretation stage can violate privacy rights.

  - Countermeasure: Make sure you protect the sensitive information in your data by using privacy-preserving techniques, such as differential privacy, and adhere to data minimization practices to ensure only necessary data is used and disclosed.
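Two of the countermeasures above can be sketched in a few lines of Python. This is a teaching sketch under stated assumptions, not a production tool: the function names, group labels, and numbers are all hypothetical. `disparate_impact_ratio` compares how often each group gets a favorable outcome, and `noisy_count` adds Laplace noise to a count, which is the basic idea behind one common form of differential privacy. Real projects should rely on a vetted privacy library rather than hand-rolled noise.

```python
import random


def disparate_impact_ratio(selected_by_group, total_by_group):
    """Ratio of the lowest group selection rate to the highest.

    1.0 means every group is selected at the same rate. A common rule
    of thumb (the "four-fifths rule") treats ratios below 0.8 as a
    signal to investigate further, not as proof of unfairness.
    """
    rates = {
        group: selected_by_group[group] / total_by_group[group]
        for group in total_by_group
    }
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


def noisy_count(true_count, epsilon, rng=random):
    """Return a count with Laplace noise added (sensitivity assumed to be 1).

    Smaller epsilon means more noise and stronger privacy. The Laplace
    noise is drawn as the difference of two exponential samples.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise


# Hypothetical numbers: how many students from each grade were selected.
selected = {"grade 9": 12, "grade 12": 30}
totals = {"grade 9": 40, "grade 12": 50}
ratio = disparate_impact_ratio(selected, totals)
print(f"Disparate impact ratio: {ratio:.2f}")

# Share a noisy version of a sensitive count instead of the exact value.
print(f"Noisy count: {noisy_count(40, epsilon=0.5):.1f}")
```

In the made-up example, grade 9 students are selected at half the rate of grade 12 students, so the ratio falls well below 0.8 and is worth a closer look. The noisy count changes on every run, which is the point: no single published number reveals the exact underlying value.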

Data ethics might sound a bit tough, but remember, it’s like playing a video game. Learn the rules, respect them, and you’ll not only have fun, but you’ll also become a data superstar! So, let’s get ready to decode the world of data together. Happy investigating!

Rocking the Data Stage: A Symphony of Data Ethics in High School

In the bustling high school of Lincoln Park, 16-year-old Alex was known for two things: his incredible knack for statistics and his unending love for music. One day, he decided to merge his passions and began a project that sought to understand the most popular music genres among high school students. Alex’s goal was to propose a lineup for the upcoming school talent show that would appeal to the majority.

Alex surveyed his fellow students and compiled a rich dataset, but he knew that the raw data was only the beginning. The real challenge was ensuring he interpreted this data in an ethical manner.

He started by verifying his results. Aware that self-reported data could be biased, he cross-referenced his survey with other data, such as the most-streamed genres in the school’s music club. His findings were consistent, indicating that his survey was relatively accurate.

Still, Alex acknowledged the uncertainty in his data. He had taken a representative sample, but he knew he couldn’t capture every student’s preference. He communicated this when he presented his preliminary findings to the talent show committee, ensuring they understood the limitations.

Then, he pondered the context. Would the selection of only popular genres sideline talented students who excelled in less mainstream genres? Concerned about the potential disparity, Alex used the Fairness, Accountability, and Transparency in Machine Learning (FATML) framework to weigh the potential impacts. He decided to propose a lineup that balanced popular appeal and diversity, giving everyone a chance to shine.

Once the talent show was announced, Alex didn’t just sit back and relax. He actively sought feedback about his interpretation and its real-world effects. Did the students feel the show represented their preferences? Was there a genre that he missed? Based on this feedback, he made adjustments to his interpretation for future events.

However, Alex’s keen sense of data ethics didn’t stop there. In his original survey, he had collected data on individual students’ favorite artists and songs. But when sharing his findings, he only discussed aggregated trends to ensure he did not inadvertently reveal anyone’s personal music preferences.

The talent show was a resounding success, and Alex’s careful, ethical interpretation of data played no small part in that. Through his love for statistics and music, Alex rocked the data stage, hitting all the right notes of data ethics. His performance was a reminder that correctly applying data ethics doesn’t just create accurate data projects; it creates harmonious communities as well.