Imagine you’re assembling a jigsaw puzzle. You’ve spent hours on it, placing each piece meticulously. The picture is almost complete: a scenic countryside with lush fields, a blue river winding through, and a vibrant sunset. Yet there’s one piece missing, the crucial bit that completes the sun. How would the picture look? Incomplete.

Life, as you see, revolves around completeness, and so does the realm of data science. Just as the missing puzzle piece leaves your image unfinished, missing or incomplete data can drastically alter the insights we derive, leading to potentially faulty decisions and strategies.

Today, we’re diving into the fascinating world of exploring data completeness. We’ll see why it’s about more than just filling in the blanks: it’s about creating a complete picture that enables us to see patterns, make informed decisions, and, most importantly, understand the world around us in a more profound way.

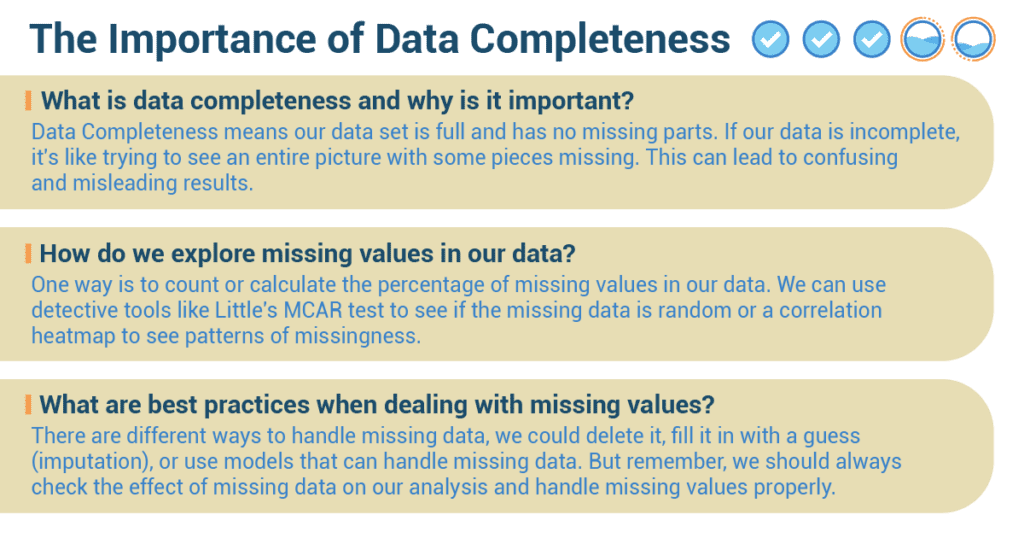

What is data completeness, and why is it important?

- If our data is incomplete, it’s like trying to see an entire picture with some pieces missing.

This can lead to confusing and misleading results. Think about how you felt when you didn’t know the final score of your soccer game!

- Incomplete data can make machine learning models not work well.

In the tech world, there are smart machines that learn from data, called machine learning models. These models can get confused when they come across missing values, just like how you would make a mistake if you didn’t know the full instructions for a homework assignment. As a result, their predictions may be wrong, or at least not the best they could be.

- Incomplete data can make our preprocessing steps tricky.

Preprocessing is like getting our data ready before we use it for different tasks. But when our data has missing parts, it can make the preparation steps a bit harder. Deciding how to deal with missing values can influence the outcomes of our entire analysis.

- Missing data can affect how we understand the distribution and relationships in our data.

For example, if we have missing information about people’s ages in a survey, it might make it difficult to accurately analyze the age distribution and its impact on other variables like income or education level. This can lead to incomplete or misleading insights.
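
To make the age example concrete, here is a minimal sketch using pandas. The survey values are invented for illustration, and the assumption that everyone aged 60 or older skipped the age question is hypothetical; it is only there to show how non-random gaps can shift a summary statistic.

```python
import pandas as pd

# Hypothetical survey data. The true ages are known here only so that
# we can compare them with what an analyst would actually observe.
survey = pd.DataFrame({
    "age":    [22, 25, 31, 38, 44, 52, 58, 63, 67, 70],
    "income": [28, 32, 41, 50, 58, 64, 66, 61, 45, 40],  # in thousands
})
true_mean_age = survey["age"].mean()

# Assumption for illustration: respondents aged 60+ left the age field blank.
# Series.where keeps values where the condition holds and puts NaN elsewhere.
observed = survey.copy()
observed["age"] = survey["age"].where(survey["age"] < 60)

# pandas skips NaN values when computing the mean.
observed_mean_age = observed["age"].mean()

print(f"true mean age:     {true_mean_age:.1f}")      # 47.0
print(f"observed mean age: {observed_mean_age:.1f}")  # 38.6
print(f"share missing:     {observed['age'].isna().mean():.0%}")  # 30%
```

In this toy example the observed average age is more than eight years lower than the true one, so any conclusion about how age relates to income would rest on a distorted picture.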

How do we explore missing values in our data?

- Identify missing values.

The first step in tackling missing values is finding them. It’s like playing detective in a mystery game. We have cool techniques to help us with this:

- Numeric: One way is to count or calculate the percentage of missing values in our data, just like how you might count how many questions you got wrong on a test.

- Visual: We can also use visuals like a matrix or a heatmap (a color-coded chart), just like how you might use a color-coded schedule to see which periods you have for each subject.

- Understand why values are missing.

We also need to figure out why values are missing. Sometimes, it’s random, and sometimes, it’s related to other data. We can use detective tools to help us:

- Numeric: Tests like Little’s MCAR test help check whether the data is missing completely at random (MCAR).

- Visual: A correlation heatmap of missingness, showing which columns tend to be missing together, helps identify patterns.
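
The numeric checks above can be sketched in a few lines of pandas. The dataset and column names below are made up for illustration. The last step computes the correlation between "is missing" indicators, which is the kind of table a missingness heatmap would color-code; Little’s MCAR test is not shown because pandas does not provide it.

```python
import numpy as np
import pandas as pd

# Hypothetical dataset with gaps in every column.
df = pd.DataFrame({
    "age":       [34, np.nan, 29, np.nan, 41, 38],
    "income":    [52000, np.nan, 48000, np.nan, 61000, np.nan],
    "education": ["BSc", "MSc", None, "PhD", "BSc", "MSc"],
})

# Count and percentage of missing values per column.
missing_count = df.isna().sum()
missing_pct = df.isna().mean() * 100

summary = pd.DataFrame({"missing": missing_count, "percent": missing_pct.round(1)})
print(summary)

# Turn each column into a 0/1 "is missing" indicator and correlate the
# indicators. Values near 1 mean two columns tend to be missing together.
missing_corr = df.isna().astype(int).corr()
print(missing_corr.round(2))
```

Here `age` and `income` have a missingness correlation of about 0.71, a hint that whatever causes one to be blank often causes the other to be blank too. The `missing_corr` table could be handed to any plotting library’s heatmap function to get the visual version.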

What are best practices when dealing with missing values?

- When dealing with missing values, it’s important to understand the context, like knowing the rules of a game.

The effect of missing data depends on the context. If key variables have lots of missing values, the analysis may not be accurate. We always need to understand the importance of variables with missing data. Without understanding the context, it’s hard to decide how to deal with missing data.

- For example, if you’re missing the scores of key players in your game, the final result might not be accurate. Similarly, we need to understand the importance of missing data in our analysis.

- Deal with Missing Data Properly.

There are different ways to handle missing data, just like there are different ways to handle a forgotten work assignment. We could delete it, fill it in with a guess (imputation), or use models that can handle missing data. The choice depends on why and how much data is missing and what we’re trying to find out.

- Imagine you have collected data about your coworkers’ favorite colors, but some coworkers didn’t provide their color choices. To handle the missing data properly, you could fill in the missing colors based on what other coworkers like or use a model to predict their favorite colors, depending on how much data is missing and what you want to find out.

- Don’t Just Delete Missing Data.

A common mistake is to just delete any data with missing values without understanding why it’s missing. This could create bias or lose valuable information. Before deleting, we need to understand why the data is missing and consider other methods like imputation.

- Suppose you conducted a survey about employees’ transportation to work, and some responses regarding transportation were missing. Instead of just deleting those responses, you need to understand why that information is missing. For example, some employees might not have answered because they walk to work or carpool. Deleting their responses would under-represent those groups, so you could impute the values instead, for instance with the most common mode of transportation, keeping in mind that the best fill-in depends on why the answers are missing.

- Don’t Ignore the Effect of Missing Data.

We should always check the effect of missing data on our analysis and handle missing values properly. Ignoring missing data is like ignoring a foul in a soccer game – it can lead to wrong or misleading results.

- Imagine you are studying the relationship between employees’ sleep patterns and their work performance. If you ignore the missing data for some employees’ sleep hours, you might draw inaccurate conclusions. Just like a soccer referee should not ignore a foul during a game, you should handle missing data properly to avoid wrong or misleading results in your analysis.
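
As a rough sketch of these practices, the pandas snippet below compares plain deletion with a simple imputation on a small, invented employee dataset. The column names and values are hypothetical, and filling with the median or the most common value is just one basic choice among many, not a recommendation for every case.

```python
import numpy as np
import pandas as pd

# Hypothetical employee data with gaps in sleep hours and commute mode.
df = pd.DataFrame({
    "sleep_hours": [7.0, 6.5, np.nan, 8.0, np.nan, 5.5, 7.5, np.nan],
    "commute":     ["car", "bus", "car", None, "car", "bike", None, "bus"],
    "performance": [78, 74, 61, 85, 58, 66, 80, 63],
})

# Option 1: delete every row that has at least one missing value.
dropped = df.dropna()

# Option 2: simple imputation. Fill numeric gaps with the median and
# categorical gaps with the most common value (the mode).
imputed = df.copy()
imputed["sleep_hours"] = imputed["sleep_hours"].fillna(imputed["sleep_hours"].median())
imputed["commute"] = imputed["commute"].fillna(imputed["commute"].mode()[0])

# Check the effect of each choice instead of ignoring it.
print(f"rows: original={len(df)}, after deletion={len(dropped)}, after imputation={len(imputed)}")
print(f"mean performance, all rows:       {df['performance'].mean():.1f}")       # 70.6
print(f"mean performance, after deletion: {dropped['performance'].mean():.1f}")  # 72.7
```

Deletion keeps only 3 of the 8 rows here, and because the employees with missing sleep hours happen to have lower performance scores, the average performance looks better than it really is. Imputation keeps every row, but the filled-in values are guesses, so it is worth comparing results under both approaches before trusting either.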

In the realm of food manufacturing, Alex Carter, a seasoned corporate professional with a background in supply chain management, embarked on a journey that would shed light on the importance of data completeness. His exploration was driven by the need to understand how gaps in data could impact the efficiency and quality of operations in the food manufacturing industry.

Alex’s project was born out of a desire to optimize supply chain processes within a food manufacturing company. As a corporate professional well-versed in the intricacies of operations, he knew that accurate and complete data was the linchpin to effective decision-making. His journey was centered around exploring the extent to which data completeness influenced the company’s performance. Alex recognized that data completeness wasn’t just about having a dataset filled with numbers and figures; it was about having the right data available at the right time. Drawing from his corporate experience, he understood that missing or incomplete data could lead to inaccurate forecasts, delayed shipments, and even compromised product quality.

Armed with his understanding, Alex delved into the company’s data ecosystem. He sifted through production records, inventory logs, and supply chain reports. He soon discovered that data gaps were prevalent, ranging from incomplete shipment information to missing quality control metrics. His exploration highlighted that these gaps were often overlooked, leading to decisions made without a comprehensive view of the situation.

Drawing parallels from his corporate background, Alex recognized the implications of incomplete data on decision-making. He realized that the company’s inability to access complete and accurate data hindered its ability to forecast demand accurately, allocate resources efficiently, and maintain consistent product quality. These gaps directly impacted the company’s competitiveness and bottom line. Alex’s journey further unveiled the correlation between data completeness, supply chain efficiency, and quality control. He found that incomplete data made it difficult to track the movement of ingredients, leading to delays and disruptions in production. Moreover, missing quality control metrics made it challenging to identify trends or patterns that could indicate potential quality issues.

With these insights in hand, Alex adopted a solution-oriented mindset. Just as his corporate experience had taught him to identify bottlenecks and inefficiencies, he tackled data completeness gaps head-on. He proposed initiatives to streamline data collection processes, implement automated data-capturing systems, and foster a culture of data integrity.

As Alex’s proposed initiatives took shape, their impact became evident. The company experienced smoother operations, reduced supply chain disruptions, and improved product quality. Alex’s case study exemplifies how a corporate professional’s commitment to data completeness can enhance efficiency and quality within the food manufacturing industry. Through his exploration, he underscores the critical role of complete and accurate data in driving effective decision-making and operational excellence.