Let’s imagine this scenario: You’re surfing the internet on a lazy weekend, searching for a fabulous new pair of sneakers. A few hours later, you start noticing pop-ups for sneaker sales, emails about discounts on similar brands, and even social media ads showcasing the latest sneaker trends. You pause and wonder, “How did they know I was looking for sneakers?” It’s all about data, my friends 📊. The websites you visit track your search history, and based on your online activities, they start offering personalized ads and discounts.

This type of scenario is more common than we realize in our digital lives. We exist in a data-driven universe where every search, every click, every online purchase, and even every meme we share is being collected, analyzed, and utilized. Our data shapes the virtual world around us, leading to personalized recommendations, targeted ads, optimized gaming experiences, and even better-curated playlists 🎶.

However, while data can be a powerful tool, like any tool, it can be misused. Remember that online shopping scenario? How would you feel if you found out your data wasn’t only used to provide targeted ads but also to predict and manipulate your behavior? Or perhaps used without your knowledge for purposes you never consented to?

The Importance of Data Ethics and Statistical Analysis

Imagine you’re a detective. You’ve got a pile of clues (or data) in front of you. Your job? To solve the case (or understand the data). But here’s the catch – not all clues are equal, and how you use them can change the story you’re telling.

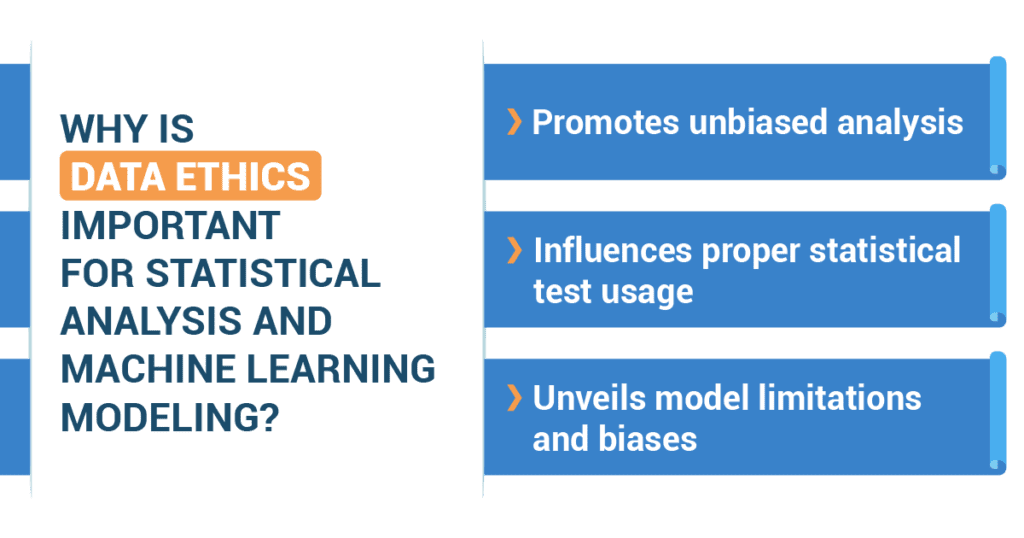

That’s where data ethics comes into play. It’s the guiding principle in your detective work, ensuring you don’t unfairly pick and choose clues that only support your pre-determined conclusions, kind of like picking only the sprinkles you like from a bowl of rainbow sprinkles. When analysts do this with statistics, running many analyses and reporting only the ones that reach statistical significance, we call it “p-hacking”, and it’s a no-no in our detective world. Similarly, the choice of model or method can bias the analysis if it isn’t aligned with the characteristics of the data or the problem at hand. For example, a survey is a great tool if you want to know people’s opinions on specific topics; it is not the best method if you want to understand the reasons underlying those opinions.
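To see why cherry-picking clues misleads, here is a small simulation sketch. Two groups are compared on 20 made-up outcome metrics that contain no real differences at all. If an analyst tests all 20 and reports only the best-looking one, chance alone should produce a “significant” result (p < 0.05) in roughly 1 - 0.95^20 ≈ 64% of studies. A Bonferroni correction, which divides the threshold by the number of tests, brings that back to about 5%. The group sizes, the known-variance z-test, and the random seed are illustrative assumptions, not part of any real study.

```python
import math
import random


def z_test_p_value(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known standard deviation."""
    diff = sum(a) / len(a) - sum(b) / len(b)
    standard_error = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = diff / standard_error
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def study_p_values(rng, n_metrics=20, n_per_group=20):
    """Compare two groups on n_metrics outcomes that are pure noise (no real effect)."""
    p_values = []
    for _ in range(n_metrics):
        group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        p_values.append(z_test_p_value(group_a, group_b))
    return p_values


def spurious_finding_rates(n_studies=1000, n_metrics=20, alpha=0.05, seed=42):
    """Share of noise-only studies that 'find' something when only the best p-value is reported."""
    rng = random.Random(seed)
    cherry_picked = 0  # best p-value passes the usual alpha
    corrected = 0      # best p-value passes a Bonferroni-adjusted alpha
    for _ in range(n_studies):
        best_p = min(study_p_values(rng, n_metrics))
        cherry_picked += int(best_p < alpha)
        corrected += int(best_p < alpha / n_metrics)
    return cherry_picked / n_studies, corrected / n_studies


if __name__ == "__main__":
    naive, bonferroni = spurious_finding_rates()
    print(f"Noise-only studies reporting a 'significant' finding: {naive:.0%}")
    print(f"Same studies with a Bonferroni correction:           {bonferroni:.0%}")
```

The exact percentages depend on the seed, but the gap between the two lines is the point: reporting only the best of many tests manufactures findings out of nothing.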

Data ethics can significantly influence how we build and evaluate our models. If evaluation isn’t conducted ethically, it may not fully disclose the model’s limitations and potential biases, leading to overconfidence in its predictions or conclusions.

Moreover, data ethics makes sure you present your findings honestly, showing not just the strengths but also the weaknesses and biases of your data, methods, and conclusions. It’s like not just pointing out the suspect you believe is guilty but also discussing other potential suspects and evidence.

Apply Data Ethics When Performing Statistical Analysis and Machine Learning Modeling

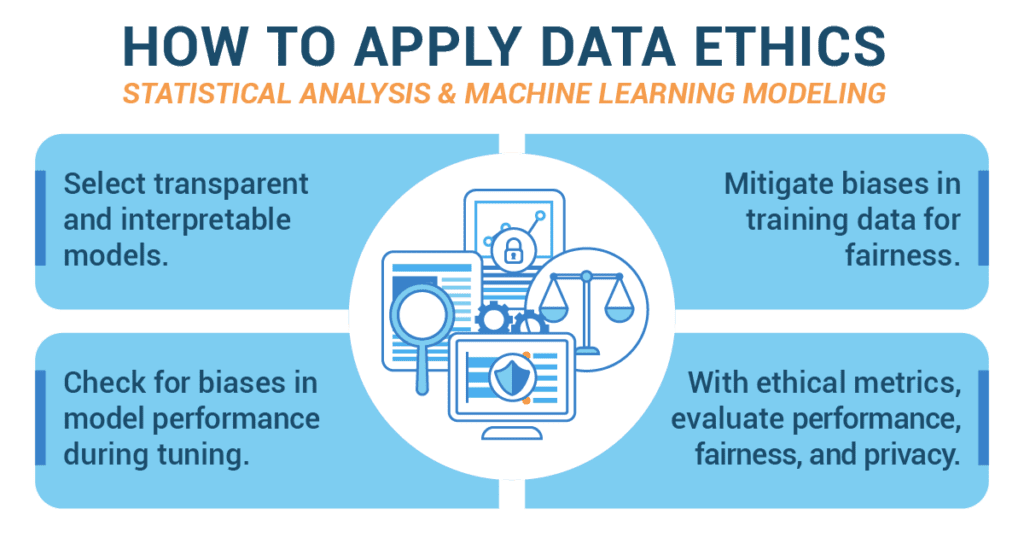

- Choose models that can provide transparency and interpretability.

Just like detectives follow a code of conduct, we data detectives have principles to guide us, such as those promoted by FATML (Fairness, Accountability, and Transparency in Machine Learning). We have to choose our models and methods thoughtfully, making sure they are fair, do not favor one group over another, and can be explained to the stakeholders who rely on them.

- Ensure fairness by critically analyzing and processing the training data to mitigate any existing biases.

There are cool tools available to help us along the way. IBM’s AI Fairness 360 helps us examine our evidence (or data) and our models for bias and provides algorithms to reduce it. Google’s TensorFlow Privacy helps us train models with differential privacy, which protects the people behind the data, just like detectives protect their informants’ identities.

- During tuning, it’s crucial to check again for biases in the model’s performance.

Checking for bias is an ongoing process for ML models. Just like a detective reviews the facts before presenting the case, we re-check our models’ performance across groups after every round of tuning. Privacy needs the same ongoing care: techniques like differential privacy limit how much a trained model can reveal about any single individual in the dataset.
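Differential privacy protects individuals rather than detecting bias. Its simplest building block is the Laplace mechanism: to release a count, add random noise drawn from a Laplace distribution with scale 1/ε, because adding or removing one person changes a count by at most 1. The sketch below is plain Python and does not use TensorFlow Privacy (which applies a related idea, DP-SGD, during model training); the survey ages and the choice of ε = 1 are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """One draw from Laplace(0, scale): the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Epsilon-differentially private count using the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon is sufficient.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical survey data: ages of ten respondents.
ages = [23, 35, 41, 19, 52, 37, 29, 61, 44, 33]

if __name__ == "__main__":
    rng = random.Random(7)
    noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
    print(f"Noisy count of respondents aged 40+: {noisy:.1f}")
```

Smaller values of ε add more noise and give stronger privacy. Answering the same query many times and averaging would wash the noise out, which is why each additional release spends more of the privacy budget.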

- Use metrics that take into account not just model performance but also ethical considerations like fairness and privacy.

Evaluate the model across different demographic groups to ensure it performs fairly for all groups. The Ethical Matrix is a framework that allows for systematic consideration of different groups affected by the model.
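The Ethical Matrix itself is a discussion framework rather than code, but the per-group numbers it relies on are easy to compute. The sketch below, using hypothetical labels, predictions, and groups A and B, reports accuracy, true positive rate, and selection rate separately for each group, plus the largest gap between groups on a chosen metric.

```python
import math
from collections import defaultdict


def metrics_by_group(y_true, y_pred, groups):
    """Accuracy, true positive rate and selection rate per group, for 0/1 labels."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "tp": 0, "selected": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        c["pos"] += int(truth == 1)
        c["tp"] += int(truth == 1 and pred == 1)
        c["selected"] += int(pred == 1)
    return {
        group: {
            "accuracy": c["correct"] / c["n"],
            # Undefined (NaN) when a group has no positive examples.
            "true_positive_rate": c["tp"] / c["pos"] if c["pos"] else math.nan,
            "selection_rate": c["selected"] / c["n"],
        }
        for group, c in counts.items()
    }


def largest_gap(report, metric):
    """Difference between the best- and worst-served group on one metric."""
    values = [m[metric] for m in report.values() if not math.isnan(m[metric])]
    return max(values) - min(values)


# Hypothetical labels and predictions for two demographic groups, A and B.
y_true = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

if __name__ == "__main__":
    report = metrics_by_group(y_true, y_pred, groups)
    for group, metrics in sorted(report.items()):
        print(group, {name: round(value, 2) for name, value in metrics.items()})
    print("accuracy gap:", largest_gap(report, "accuracy"))
```

On this toy data the overall accuracy is 9 of 12 (75%), which sounds fine. Broken down, though, group A is classified perfectly while group B gets only half right, and only one of group B’s three true positives is caught.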

Common Data Ethics Mistakes and Countermeasures for Statistical Analysis and Machine Learning

Even the best detectives can make mistakes.

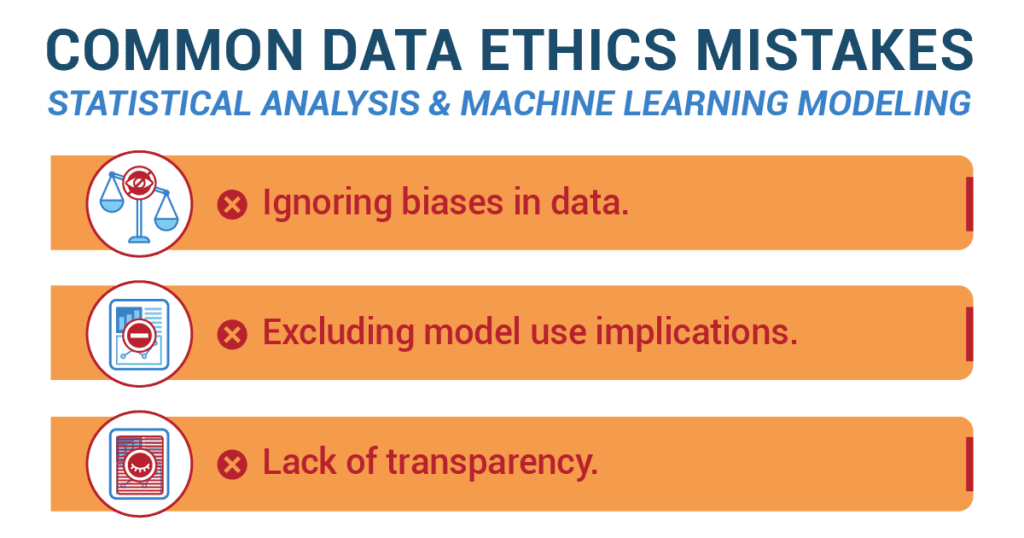

- Ignoring biases in data
  - A detective might focus too much on one clue and miss other important pieces of information. Similarly, in data analysis, we may ignore biases in our data, leading to unfair conclusions.
  - Countermeasure: Perform a thorough bias audit of the dataset and mitigate biases where possible through techniques like re-sampling or re-weighting.

- Not considering the implications of model use
  - Failing to consider how the model will be used can lead to harmful outcomes, especially when the model informs high-stakes decisions such as hiring, lending, or medical care.
  - Countermeasure: Thoroughly assess the potential impacts of the model, including consulting with affected communities where possible.

- Lack of transparency
  - Models that are too complex to understand can create mistrust and make it difficult to notice when they’re making unfair or incorrect predictions.
  - Countermeasure: Prioritize transparency and interpretability in model selection. Use tools like SHAP (SHapley Additive exPlanations) to help explain complex models.
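The bias-audit-and-re-weighting countermeasure can be sketched in a few lines. A basic audit compares the share of positive labels across groups. One common re-weighting scheme, often called reweighing, gives each training example the weight P(group) × P(label) / P(group, label), so that group membership and label look statistically independent in the weighted data. The groups, labels, and function names below are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example so that group membership and label look statistically independent.

    w(g, y) = P(g) * P(y) / P(g, y). Combinations that are rarer than independence
    predicts get a weight above 1; over-represented ones get a weight below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def positive_rate_by_group(groups, labels, weights=None):
    """(Weighted) share of positive labels in each group: a basic bias-audit statistic."""
    if weights is None:
        weights = [1.0] * len(labels)
    totals, positives = Counter(), Counter()
    for g, y, w in zip(groups, labels, weights):
        totals[g] += w
        positives[g] += w * y
    return {g: positives[g] / totals[g] for g in totals}


# Hypothetical training data: group A has many more positive labels than group B.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

if __name__ == "__main__":
    print("before:", positive_rate_by_group(groups, labels))
    weights = reweighing_weights(groups, labels)
    print("after: ", positive_rate_by_group(groups, labels, weights))
```

Before weighting, group A’s positive rate is about 0.67 and group B’s is 0.25; after weighting, both come out at 0.5, the overall rate. The weights would then be passed to a model’s training routine, for example through a `sample_weight` argument where the library supports one.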
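SHAP is built on Shapley values from cooperative game theory: each feature’s share of a prediction is its average marginal contribution over every order in which features could be “revealed”. The `shap` library uses fast, model-specific approximations. To show only the underlying quantity, the sketch below computes exact Shapley values by brute force for a tiny hypothetical model, filling in “absent” features from a baseline input (one of several possible conventions).

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for a single prediction, by enumerating every feature subset.

    Features outside a coalition take their baseline value. The cost grows as
    2 ** n_features, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        phis.append(phi)
    return phis


def toy_model(features):
    """Hypothetical model: an additive effect of a plus an interaction between b and c."""
    a, b, c = features
    return 2 * a + b * c


if __name__ == "__main__":
    print(shapley_values(toy_model, x=[1, 2, 3], baseline=[0, 0, 0]))
```

For the input (1, 2, 3) and an all-zero baseline, the additive term 2 * a is credited entirely to the first feature (2), and the interaction b * c = 6 is split evenly between the other two (3 each). The three values add up to the gap between the prediction (8) and the baseline prediction (0).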

So, my young data detectives, are you ready to solve the mysteries of data ethically? Remember, we must respect the clues, tell the complete story, and always consider the potential impacts of our conclusions on the communities around us. Happy investigating!

A Home Run for Data Ethics: Sasha’s Sports Statistics Showdown

High school junior Sasha had always been an avid sports enthusiast, her love for baseball second only to her passion for data science. So, when her statistics teacher announced a data project where students could pick their own topics, she saw the perfect opportunity to blend her passions: a comprehensive analysis of baseball performance statistics.

Eager to impress her peers and her teacher, Sasha decided to go the extra mile. She wanted to predict the performance of players for the upcoming season, applying machine learning models to historical statistics she obtained from various baseball databases online.

However, Sasha was aware of the ethical implications of her ambitious project. She knew that while the power of data was immense, it came with responsibilities. She had to ensure that her analysis was not only effective and insightful but also fair and ethical.

In her initial analysis, Sasha realized that player salaries had a significant influence on the model’s predictions. Higher-paid players were typically predicted to perform better. Sasha wondered whether this was fair. After all, higher salaries could reflect many factors, such as marketing appeal or seniority, which weren’t directly related to athletic performance. Recognizing this potential bias, Sasha chose to exclude salary data from her model to ensure a fair analysis based solely on athletic performance metrics.

Additionally, she discovered that her model predicted lower performance for players from certain countries. Upon examining her data, she realized it was incomplete for these regions. Sasha knew that it was unfair to make predictions for these players based on incomplete data. She decided to either find more complete data or exclude these players from her analysis, ensuring her project did not perpetuate unfair biases.
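Sasha’s discovery can be sketched as a simple per-group completeness check: before trusting predictions for any group of players, measure how much of their data is actually there. The player records, statistic names, and the 20% cut-off below are all hypothetical.

```python
from collections import defaultdict

FIELDS = ["batting_avg", "on_base_pct", "home_runs"]


def missing_rate_by_group(records, group_key, fields):
    """Share of missing (None) values across `fields`, computed separately for each group."""
    missing = defaultdict(int)
    total = defaultdict(int)
    for row in records:
        group = row[group_key]
        for field in fields:
            total[group] += 1
            if row.get(field) is None:
                missing[group] += 1
    return {group: missing[group] / total[group] for group in total}


def flag_incomplete(rates, threshold=0.2):
    """Groups whose missing-data share exceeds the (hypothetical) threshold."""
    return sorted(group for group, rate in rates.items() if rate > threshold)


# Hypothetical player records; None marks a statistic the database did not have.
players = [
    {"name": "Player 1", "country": "Country A", "batting_avg": 0.281, "on_base_pct": 0.350, "home_runs": 22},
    {"name": "Player 2", "country": "Country A", "batting_avg": 0.265, "on_base_pct": 0.331, "home_runs": 15},
    {"name": "Player 3", "country": "Country B", "batting_avg": 0.290, "on_base_pct": None, "home_runs": None},
    {"name": "Player 4", "country": "Country B", "batting_avg": None, "on_base_pct": None, "home_runs": 9},
]

if __name__ == "__main__":
    rates = missing_rate_by_group(players, "country", FIELDS)
    print(rates)
    print("needs more data or should be excluded:", flag_incomplete(rates))
```

Here Country B is missing 4 of its 6 values (about 67%), so it gets flagged. Sasha’s two options then map to gathering more data for that group or filtering it out before training.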

As Sasha prepared her machine learning model, she also kept transparency in mind. She knew her classmates weren’t all data whizzes, so she chose a model that was easy to understand and explain, even though more complex models could have slightly improved her prediction accuracy.

Once she completed her project, she shared her findings with her classmates and teacher, pointing out not just her conclusions but also the ethical choices she made throughout the process. She highlighted the potential biases she found, the steps she took to mitigate them, and the importance of transparency and interpretability.

In doing so, Sasha didn’t just deliver an excellent project; she also shared a valuable lesson with her classmates – that data science isn’t just about accuracy and efficiency. It’s also about ensuring fairness, respecting privacy, and practicing transparency. By placing data ethics at the heart of her project, Sasha didn’t just hit a home run with her analysis; she also scored a victory for ethical data practice.